AI Data Readiness: Preparing Data for Artificial Intelligence

Over the last few years we have seen spectacular advances in the use of artificial intelligence (AI) and, behind all these achievements, we will always find the same common ingredient: data. An illustrative example known to everyone is that of the language models used by OpenAI for its famous ChatGP…

Data Sandboxes: Exploring the potential of open data in a secure environment

Data sandboxes are tools that provide us with environments to test new data-related practices and technologies, making them powerful instruments for managing and using data securely and effectively. These spaces are very useful in determining whether and under what conditions it is feasibl…

New Year's resolution: Apply the UNE data specifications in your organisation

As tradition dictates, the end of the year is a good time to reflect on our goals and objectives for the new phase that begins after the chimes. In data, the start of a new year also provides opportunities to chart an interoperable and digital future that will enable the development of a robust data…

Application of the UNE 0081:2023 Specification for data quality evaluation

The new UNE 0081 Data Quality Assessment specification, focused on data as a product (datasets or databases), complements the UNE 0079 Data Quality Management specification, which focuses on data quality management processes and which we analyse in this article. Both standards 0079 and 008…

UNE 0081 Specification - Data Quality Assessment Guide

Data quality plays a key role in today's world, where information is a valuable asset. Ensuring that data is accurate, complete and reliable has become essential to the success of organisations and underpins informed decision making.

Data quality has a direct impact not only…

FAIR principles: the secret of the data wizards

Books are an inexhaustible source of knowledge and experiences lived by others before us, which we can reuse to move forward in our lives. Libraries, therefore, are places where readers look for books, borrow them and, once they have used them and extracted what they need, return them.…

Free tools to work on data quality issues

Ensuring data quality is an essential task for any open data initiative. Before publication, datasets need to be validated to check that they are free of errors, duplication, etc. In this way, their potential for re-use will grow.

Data quality is conditioned by many aspects. In this sense, the Aport…

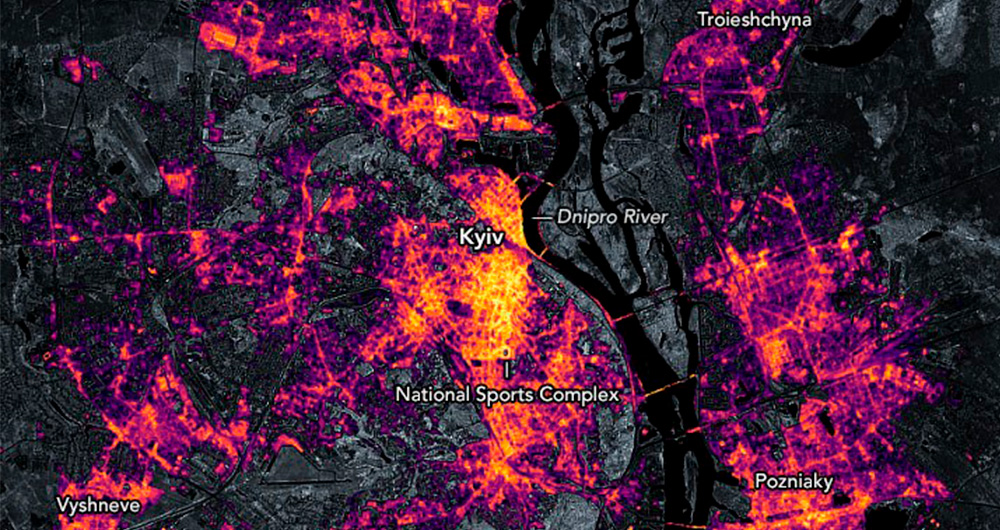

Collecting and analysing data to improve humanitarian assistance and restore damage during the Ukrainian war

On 24 February Europe entered a scenario that not even the data could have predicted: Russia invaded Ukraine, unleashing the first war on European soil so far in the 21st century.

Almost five months later, on 26 September, the United Nations (UN) published its official figures: 4,889 dead and 6,263…

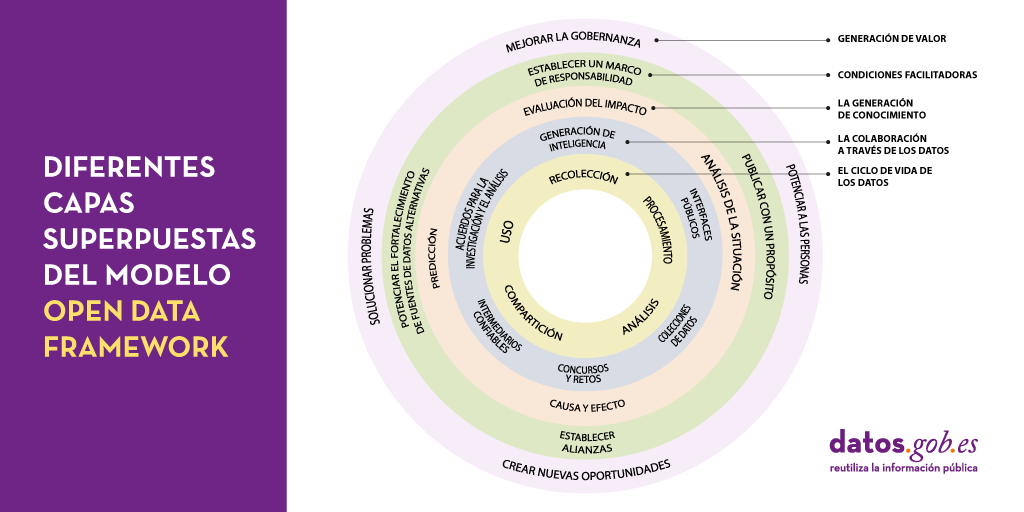

Peeling the onion of open data governance

One of the key actions that we recently highlighted as necessary to build the future of open data in our country is the implementation of processes to improve data management and governance. It is no coincidence that proper data management in our organisations is becoming an increasingly complex and…

Technical Standards to achieve Data Quality

Transforming data into knowledge has become one of the main objectives facing both public and private organisations today. To achieve this, however, the data being processed must be well governed and of good quality.

In this sense, the Spanish Association for Standardi…