10 posts found

AI tools for research and a new way to use language models

AI systems designed to assist us from the first exploratory searches to the final bibliography.

One of the missions of contemporary artificial intelligence is to help us find, sort and digest information, especially with the help of large language models. These systems have come at a time when we most need to mana…

Open data for a better understanding of the housing situation in Spain

Housing is one of the main concerns of Spanish citizens, according to the January 2025 barometer of the Centro de Investigaciones Sociológicas (CIS). To understand the real situation of access to housing, it is necessary to have public, up-to-date, high-quality data, which allows all the actors in thi…

Open data to promote healthy ageing

Data on older people can play a crucial role in promoting healthy ageing, assisting the development and maintenance of the physical and mental capacities that enable well-being in old age. This open data can be used for the development of policies to better respond to the needs of older people, such…

Open source auto machine learning tools

The increasing complexity of machine learning models and the need to optimise their performance has been driving the development of AutoML (Automated Machine Learning) for years. This discipline seeks to automate key tasks in the model development lifecycle, such as algorithm selection, data process…

Re3gistry: facilitating the semantic interoperability of data

The INSPIRE (Infrastructure for Spatial Information in Europe) Directive sets out the general rules for the establishment of an Infrastructure for Spatial Information in the European Community based on the Infrastructures of the Member States. Adopted by the European Parliament a…

Vinalod: The tool to make open datasets more accessible

Public administrations are working to ensure access to open data in order to empower citizens in their right to information. In line with this objective, the European open data portal (data.europa.eu) references a large volume of data on a variety of topics.

However, although the data belong to di…

Free tools to work on data quality issues

Ensuring data quality is an essential task for any open data initiative. Before publication, datasets need to be validated to check that they are free of errors, duplication, etc. In this way, their potential for re-use will grow.

Data quality is conditioned by many aspects. In this sense, the Aport…

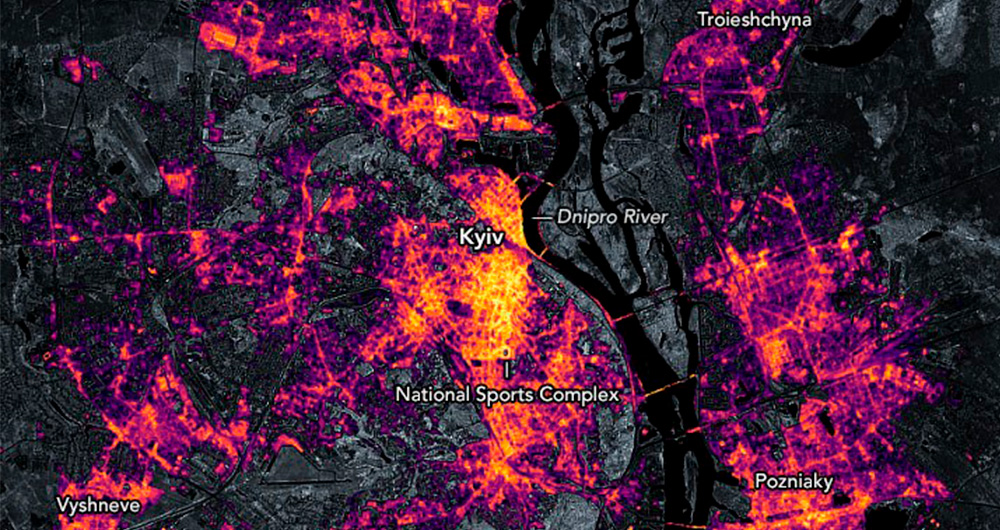

Collecting and analysing data to improve humanitarian assistance and repair damage during the war in Ukraine

On 24 February Europe entered a scenario that not even the data could have predicted: Russia invaded Ukraine, unleashing the first war on European soil so far in the 21st century.

Almost five months later, on 26 September, the United Nations (UN) published its official figures: 4,889 dead and 6,263…

API Friendliness Checker. A much-needed tool in the age of data products.

Many people don't know it, but we are surrounded by APIs. APIs are the mechanism by which services communicate on the Internet. They are what make it possible for us to log into our email or make a purchase online.

API stands for Application Programming Interface, which for most Internet users means no…

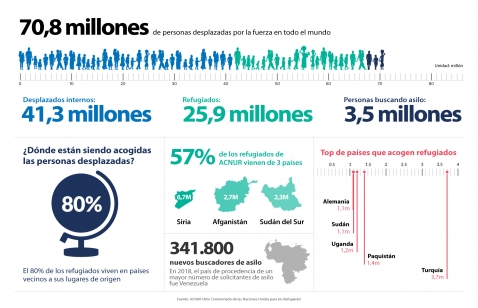

How open data can help in the refugee crisis

According to the UN Refugee Agency (UNHCR), we are currently witnessing the highest levels of displacement recorded in recent history. As of 2019, it is estimated that more than 70 million people have been forced to leave their homes, including 25.9 million legal refugees,…