11 posts found

AI tools for research and a new way to use language models

AI systems designed to assist us from the first dives to the final bibliography.

One of the missions of contemporary artificial intelligence is to help us find, sort and digest information, especially with the help of large language models. These systems have come at a time when we most need to mana…

PET technologies: how to use protected data in a privacy-sensitive way

As organisations seek to harness the potential of data to make decisions, innovate and improve their services, a fundamental challenge arises: how can data collection and use be balanced with respect for privacy? PET technologies attempt to address this challenge. In this post, we will explore what…

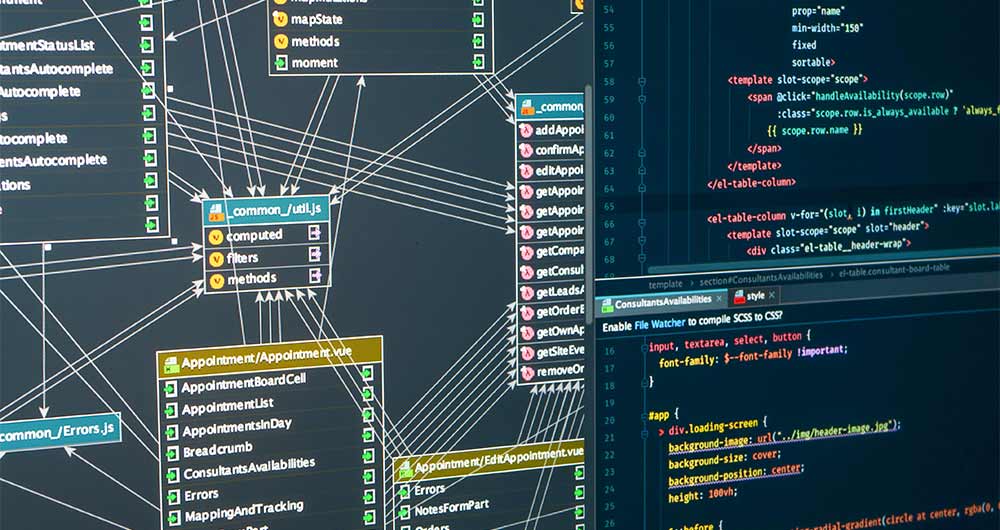

Open source auto machine learning tools

The increasing complexity of machine learning models and the need to optimise their performance have been driving the development of AutoML (Automated Machine Learning) for years. This discipline seeks to automate key tasks in the model development lifecycle, such as algorithm selection, data process…

Discovering the Digital Product Passport (DPP) and CIRPASS: A Look into the Future of the Circular Economy

Digital transformation has reached almost every aspect and sector of our lives, and the world of products and services is no exception. In this context, the Digital Product Passport (DPP) concept is emerging as a revolutionary tool to foster sustainability and the circular economy. Accompanied by in…

Re3gistry: facilitating the semantic interoperability of data

The INSPIRE (Infrastructure for Spatial Information in Europe) Directive sets out the general rules for the establishment of an Infrastructure for Spatial Information in the European Community based on the Infrastructures of the Member States. Adopted by the European Parliament a…

Vinalod: The tool to make open datasets more accessible

Public administration is working to ensure access to open data in order to empower citizens in their right to information. In line with this objective, the European open data portal (data.europa.eu) references a large volume of data on a variety of topics.

However, although the data belong to di…

Free tools to work on data quality issues

Ensuring data quality is an essential task for any open data initiative. Before publication, datasets need to be validated to check that they are free of errors, duplication, etc. In this way, their potential for re-use will grow.

Data quality is conditioned by many aspects. In this sense, the Aport…

The protection of personal data in the draft Data Governance Regulation (Data Governance Act)

Since the initial publication of the draft European Regulation on Data Governance, several steps have been taken in the procedure established for its approval, among which some particularly relevant reports stand out. With regard to the impact of the proposal on the right to the protection of pe…

API Friendliness Checker. A much needed tool in the age of data products.

Many people don't know it, but we are surrounded by APIs. APIs are the mechanism by which services communicate on the Internet. They are what make it possible for us to log into our email or make a purchase online.

API stands for Application Programming Interface, which for most Internet users means no…

The role of open data and artificial intelligence in the European Green Deal

Just a few months ago, in November 2019, Ursula von der Leyen, then still a candidate for the new European Commission 2019-2024, presented the development of a European Green Deal as the first of the six guidelines that would shape the ambitions of her mandate.

The global situation has changed radical…