AI tools for research and a new way to use language models

AI systems designed to assist us from the first exploratory searches to the final bibliography.

One of the missions of contemporary artificial intelligence is to help us find, sort and digest information, especially with the help of large language models. These systems have come at a time when we most need to mana…

PET technologies: how to use protected data in a privacy-sensitive way

As organisations seek to harness the potential of data to make decisions, innovate and improve their services, a fundamental challenge arises: how can data collection and use be balanced with respect for privacy? Privacy-enhancing technologies (PETs) attempt to address this challenge. In this post, we will explore what…

Open source auto machine learning tools

The increasing complexity of machine learning models and the need to optimise their performance have been driving the development of AutoML (Automated Machine Learning) for years. This discipline seeks to automate key tasks in the model development lifecycle, such as algorithm selection, data process…

The impact of open data along its value chain: Indicators and future directions

The transformative potential of open data initiatives is now widely recognised as they offer opportunities for fostering innovation, greater transparency and improved efficiency in many processes. However, reliable measurement of the real impact of these initiatives is difficult to obtain.

From this…

What is the value of open geographic data?

Geographic data allow us to learn about the world around us. From locating optimal travel routes to monitoring natural ecosystems, from urban planning and development to emergency management, geographic data have great potential to drive development and efficiency in multiple economic and social area…

Re3gistry: facilitating the semantic interoperability of data

The INSPIRE (Infrastructure for Spatial Information in Europe) Directive sets out the general rules for the establishment of an Infrastructure for Spatial Information in the European Community based on the Infrastructures of the Member States. Adopted by the European Parliament a…

Legal implications of open data and re-use of public sector information for ChatGPT

The emergence of artificial intelligence (AI), and ChatGPT in particular, has become one of the main topics of debate in recent months. This tool has even eclipsed other emerging technologies that had gained prominence in a wide range of fields (legal, economic, social and cultural). This is t…

Vinalod: The tool to make open datasets more accessible

Public administration is working to ensure access to open data in order to empower citizens in their right to information. Aligned with this objective, the European open data portal (data.europa.eu) references a large volume of data on a variety of topics.

However, although the data belong to di…

Free tools to work on data quality issues

Ensuring data quality is an essential task for any open data initiative. Before publication, datasets need to be validated to check that they are free of errors, duplication, etc. In this way, their potential for re-use will grow.

Data quality depends on many factors. In this sense, the Aport…

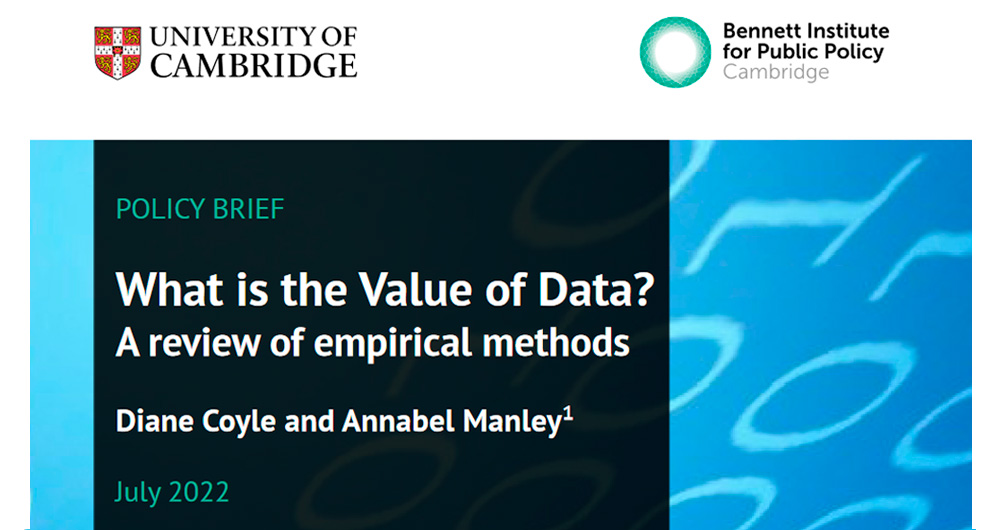

Quantifying the value of data

There is a recurring question that has been around since the beginning of the open data movement, and as efforts and investments in data collection and publication have increased, it has resonated more and more strongly: What is the value of a dataset?

This is an extremely difficult question to answ…