AI tools for research and a new way to use language models

AI systems designed to assist us from the first exploratory searches to the final bibliography.

One of the missions of contemporary artificial intelligence is to help us find, sort and digest information, especially with the help of large language models. These systems have come at a time when we most need to mana…

PET technologies: how to use protected data in a privacy-sensitive way

As organisations seek to harness the potential of data to make decisions, innovate and improve their services, a fundamental challenge arises: how can data collection and use be balanced with respect for privacy? PET technologies attempt to address this challenge. In this post, we will explore what…

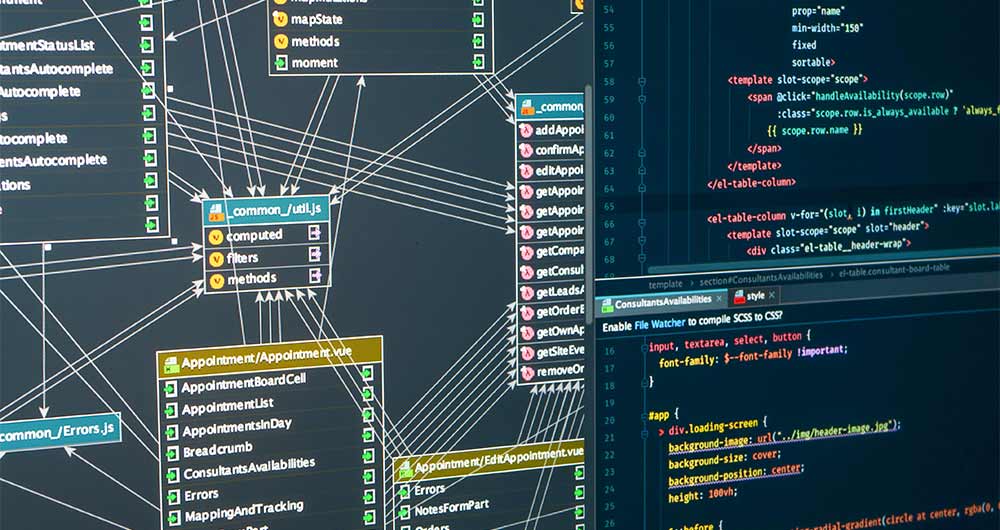

Open-source automated machine learning tools

The increasing complexity of machine learning models and the need to optimise their performance has been driving the development of AutoML (Automated Machine Learning) for years. This discipline seeks to automate key tasks in the model development lifecycle, such as algorithm selection, data process…

The future of privacy in a world dominated by open data

In the era of artificial intelligence that we are only just entering, open data has rightly become an increasingly valuable asset, supporting not only transparency but also innovation and technological development in general.

The opening of data has brought enormou…

Re3gistry: facilitating the semantic interoperability of data

The INSPIRE (Infrastructure for Spatial Information in Europe) Directive sets out the general rules for the establishment of an Infrastructure for Spatial Information in the European Community based on the Infrastructures of the Member States. Adopted by the European Parliament a…

Vinalod: The tool to make open datasets more accessible

Public administrations are working to ensure access to open data in order to empower citizens in their right to information. In line with this objective, the European open data portal (data.europa.eu) references a large volume of data on a variety of topics.

However, although the data belong to di…

Common misunderstandings in data anonymisation

Data anonymisation is a complex process and often prone to misunderstandings. In the worst case, these misconceptions lead to data leakage, directly affecting the guarantees that should be offered to users regarding their privacy.

Anonymisation aims to render data anonymous, avoiding the re-ident…

Free tools to work on data quality issues

Ensuring data quality is an essential task for any open data initiative. Before publication, datasets need to be validated to check that they are free of errors, duplicates and similar problems. This increases their potential for re-use.

Data quality depends on many factors. In this regard, the Aport…

Hackathons, a new way of attracting talent

Technology is now an essential part of our daily lives. It is no secret that many companies worldwide have been investing heavily to digitize their processes, products and services and make them more innovative.

All this has led to an increase in th…

The importance of anonymization and data privacy

We are living through a historic moment in which data has become a key asset for almost every process in our daily lives. There are ever more ways to collect data and ever greater capacity to process and share it, and new technologies such as IoT, Blockchain, Artificial Intelligence, Big Data and Linked Data play a…