7 posts found

10 principles for web development and web API design

Web API design is a fundamental discipline for the development of applications and services, facilitating the fluid exchange of data between different systems. In the context of open data platforms, APIs are particularly important as they allow users to access the information they need automatically…

Safe rooms in Spain: What kind of data can researchers access?

Some data are highly valuable but, by their nature, cannot be opened to the public at large. These are confidential data subject to third-party rights that prevent them from being made available through open platforms, but which may be essential for research that p…

The agreement to provide statistical data to researchers, in the context of the Data Governance Regulation

The European Union has devised a fundamental strategy to ensure accessible and reusable data for research, innovation and entrepreneurship. Strategic decisions have been made both in a regulatory and in a material sense to build spaces for data sharing and to foster the emergence of intermediar…

GeoParquet 1.0.0: new format for more efficient access to spatial data

Cloud data storage is currently one of the fastest-growing segments of enterprise software, and it is bringing a large number of new users into the field of analytics.

As we introduced in a previous post, a new format, Parquet, has among its…

Chat GPT-3 API: The Gateway to Integrations

We continue our series of posts about Chat GPT-3. The expectations raised by the conversational system more than justify publishing several articles about its features and applications. In this post, we take a closer look at one of the latest announcements from OpenAI related to Chat GP…

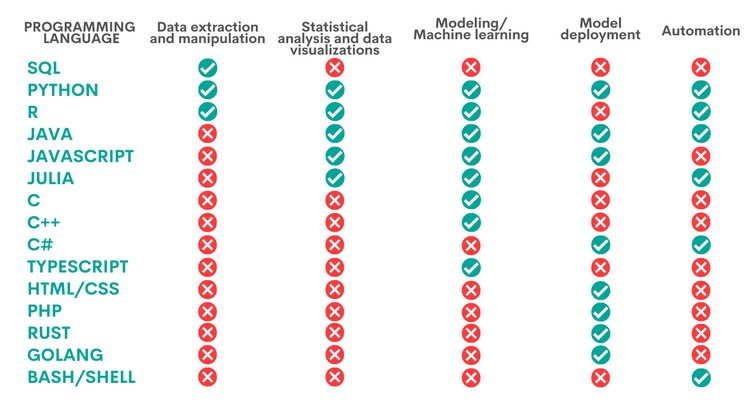

When to use each programming language in data science?

Python, R, SQL, JavaScript, C++, HTML... Nowadays we can find a multitude of programming languages that allow us to develop software programmes, applications, web pages, etc. Each one has unique characteristics that differentiate it from the rest and make it more appropriate for certain tasks. But h…

Why should you use Parquet files if you process a lot of data?

It's been a long time since we first heard about the Apache Hadoop ecosystem for distributed data processing. Things have changed a lot since then, and we now use higher-level tools to build solutions based on big data payloads. However, it is important to highlight some best practices related to ou…