The rise of predictive commerce: open data to anticipate needs

In a world where immediacy is becoming increasingly important, predictive commerce has become a key tool for anticipating consumer behaviors, optimizing decisions, and offering personalized experiences. It's no longer just about reacting to the customer's needs; it's about predicting what they…

How to ensure the authenticity of satellite imagery

Synthetic images are visual representations artificially generated by algorithms and computational techniques, rather than being captured directly from reality with cameras or sensors. They are produced by different methods, among them generative adversarial networks (Generative Adversarial…

Altruistic projects to create AI models in co-official languages

Artificial intelligence (AI) assistants are already part of our daily lives: we ask them the time, how to get to a certain place or we ask them to play our favorite song. And although AI, in the future, may offer us infinite functionalities, we must not forget that linguistic diversity is still a pe…

Federated machine learning: generating value from shared data while maintaining privacy

Data is a fundamental resource for improving our quality of life because it enables better decision-making processes to create personalised products and services, both in the public and private sectors. In contexts such as health, mobility, energy or education, the use of data facilitates more effic…

ALIA and foundational models: what are they and why are they key to the future of AI?

The enormous acceleration of innovation in artificial intelligence (AI) in recent years has largely revolved around the development of so-called "foundational models". Also known as Large [X] Models (LxM), Foundation Models, as defined by the Center for Research on Foundation Mod…

Using Pandas for quality error reduction in data repositories

There is no doubt that data has become a strategic asset for organisations. Today, it is essential to ensure that decisions are based on quality data, whatever their purpose: data analytics, artificial intelligence or reporting. However, ensuring data repositories with high levels…

Understanding Word Embeddings: how machines learn the meaning of words

Natural language processing (NLP) is a branch of artificial intelligence that allows machines to understand and manipulate human language. At the core of many modern applications, such as virtual assistants, machine translation and chatbots, are word embeddings. But what exactly are they and why are…

Open science and information systems for research

The European Open Science Cloud (EOSC) is a European Union initiative that aims to promote open science through the creation of an open, collaborative and sustainable digital research infrastructure. EOSC's main objective is to provide European researchers with easier access to the data, tools and re…

The relevance of open data for medical research: The Case of FISABIO Foundation

In the digital age, technological advancements have transformed the field of medical research. One of the factors contributing to technological development in this area is data, particularly open data. The openness and availability of information obtained from health research provide multiple benefi…

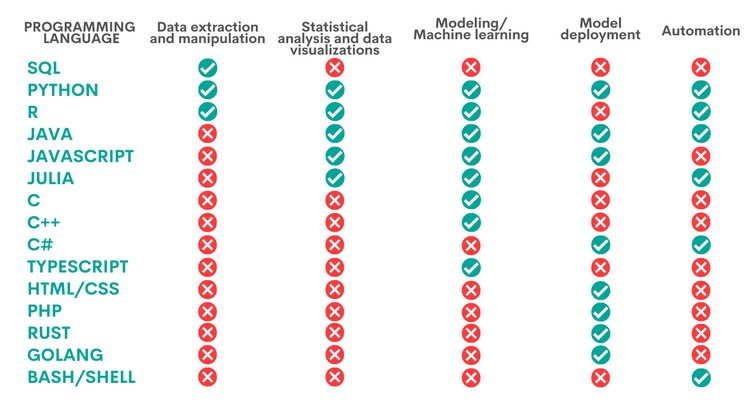

When to use each programming language in data science?

Python, R, SQL, JavaScript, C++, HTML... Nowadays we can find a multitude of programming languages that allow us to develop software programmes, applications, web pages, etc. Each one has unique characteristics that differentiate it from the rest and make it more appropriate for certain tasks. But h…