
10 principles for web development and web API design

Web API design is a fundamental discipline for the development of applications and services, facilitating the fluid exchange of data between different systems. In the context of open data platforms, APIs are particularly important as they allow users to access the information they need automatically…

Data Sandboxes: Exploring the potential of open data in a secure environment

Data sandboxes are tools that provide us with environments to test new data-related practices and technologies, making them powerful instruments for managing and using data securely and effectively. These spaces are very useful in determining whether and under what conditions it is feasibl…

GeoParquet 1.0.0: new format for more efficient access to spatial data

Cloud data storage is currently one of the fastest-growing segments of enterprise software, and it is bringing a large number of new users into the field of analytics.

As we introduced in a previous post, a new format, Parquet, has among its…

Chat GPT-3 API: The Gateway to Integrations

We continue with the series of posts about Chat GPT-3. The expectation raised by the conversational system more than justifies the publication of several articles about its features and applications. In this post, we take a closer look at one of the latest announcements published by OpenAI related to Chat GP…

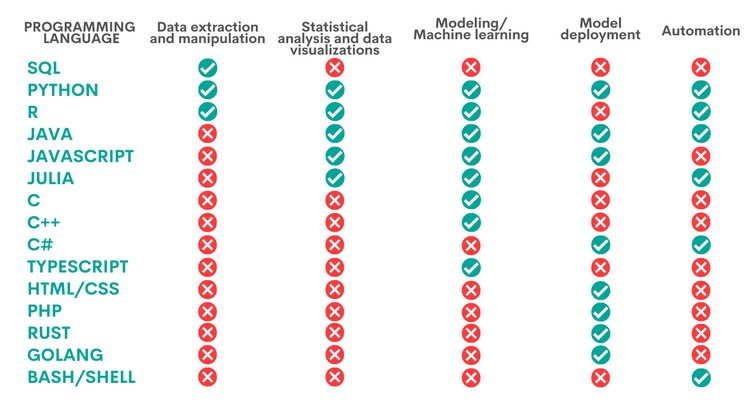

When to use each programming language in data science?

Python, R, SQL, JavaScript, C++, HTML... Nowadays we can find a multitude of programming languages that allow us to develop software programmes, applications, web pages, etc. Each one has unique characteristics that differentiate it from the rest and make it more appropriate for certain tasks. But h…

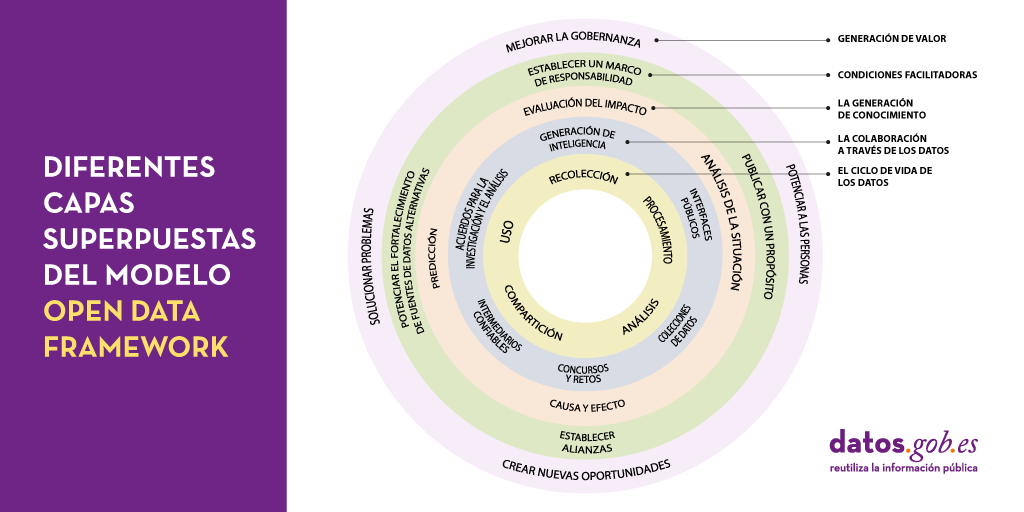

Peeling the onion of open data governance

One of the key actions that we recently highlighted as necessary to build the future of open data in our country is the implementation of processes to improve data management and governance. It is no coincidence that proper data management in our organisations is becoming an increasingly complex and…

Why should you use Parquet files if you process a lot of data?

It's been a long time since we first heard about the Apache Hadoop ecosystem for distributed data processing. Things have changed a lot since then, and we now use higher-level tools to build solutions based on big data payloads. However, it is important to highlight some best practices related to ou…

Laboratories for innovation in data management

Current approaches to public policy-making that respond quickly to changing trends in technology are too often unsuccessful. Policy makers are often pressured to develop and adopt laws or guidelines without the evidence needed to do so safely and without the opportunity to consult affected experts a…